Imagine this: crystal waters, a sun-drenched deck and the gentle sway of a Phuket boat — the sort of postcard-perfect day that makes you forget your email password. Now imagine someone trying to Photoshop that postcard into evidence for a refund. That’s the drama unfolding in Phuket, and it comes with a modern twist: artificial intelligence.

According to a post on the Facebook page Phuket Times, a group of French tourists who boarded a tour boat in Phuket photographed the vessel inside and out during their trip. Later, the page alleges, those images were edited using AI tools to make the boat look shabby — old, dirty and poorly maintained — and then submitted to a travel agency as proof to justify a refund claim.

Phuket Times shared several of the allegedly doctored images in the comments: an inflatable slide that looks unusually worn and torn, a bathroom snapshot featuring a filthy toilet and scattered rubbish, and a sad-looking banana gone moldy. If these images were authentic, they would be valid reasons to complain. But the plot thickens: among the photos from the same trip was another snap showing three Frenchmen clearly enjoying themselves onboard — suntanned smiles, relaxed posture, and all the vibes of a good day at sea. That image, naturally, muddied the waters of the complaint.

The report didn’t say how much the tourists paid for the trip, nor did it include a statement from the boat operator or the travel agency involved. As of the Phuket Times post, neither company had released an official comment, leaving readers to rely on the photos and the allegation that AI played a role in editing them.

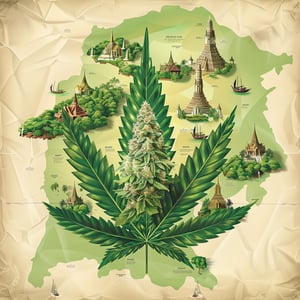

What followed on social media was a predictable but heated mix of outrage, calls for action and storytelling from local businesspeople. Thai netizens urged authorities to investigate and prosecute the tourists if the edits were proven to be fraudulent. The argument was straightforward: concocting evidence damages Phuket’s reputation and tarnishes the broader tourism industry — a vital economic engine for the island.

Local business owners chimed in with their own experiences. One hotelier described guests who booked via an online platform, paid by credit card and then, after checking out, lodged complaints seeking refunds even though the hotel’s services had met advertised standards. Another hotel operator alleged that guests left without settling a 3,000-baht bill and then posted a scathing review, despite having stayed more than ten days. A restaurant owner recounted customers demanding refunds and broadcasting their dissatisfaction, even though the restaurant was confident its food had been prepared and served properly.

These complaints highlight a growing tension in travel: the balance between genuine consumer protection and opportunistic claims. Travelers absolutely deserve recourse when services fall short. Photographic proof is often persuasive. But what happens when photos can be altered with a few clicks or a simple AI prompt?

AI-driven image editing isn’t just a gimmick anymore. Tools that once required advanced skill are increasingly accessible to anyone with a smartphone and a curious mind. That democratization of technology is brilliant for creativity — and worrying for business owners who may suddenly find their reputations kneecapped by manipulated evidence.

So how should the industry respond? For one thing, travel firms and boat operators need to up their verification game. Time-stamped photos, corroborating witness statements, short onboard videos and consistent pre- and post-trip inspections can help establish facts. Online platforms can also beef up dispute resolution processes, requiring multiple forms of evidence before issuing refunds. Legal systems, too, may need to evolve to address alleged fraud involving AI-manipulated media.

On the flip side, authorities must be careful to preserve legitimate consumer protections. The last thing Phuket — or any tourist destination — needs is a culture where travelers are afraid to complain about genuine problems for fear of being dismissed automatically. Fairness demands a measured response: protect businesses from fraudulent claims while ensuring honest grievances are heard and resolved.

The Phuket case — alleged AI edits and all — is a snapshot of a wider, modern challenge. It’s a story about technology outpacing policy, and about the fragile trust between tourists and hosts. It’s also a reminder that in the age of AI, a picture can be both worth a thousand words and worth a thousand questions.

For now, the story remains an allegation reported by Phuket Times. No official statements or legal actions have been publicly disclosed. If nothing else, the episode should prompt travel providers, platforms and regulators to talk seriously about verification, transparency and the role of AI in disputes. Because whether you’re running a boat in Phuket or sipping coconut water on the beach, the last thing anybody wants is to let a pixelated banana turn into a PR crisis.

Transparency, sensible verification and a dash of common sense will go a long way toward keeping the island’s sunlit charm intact, and toward ensuring that photos capture good memories, not courtroom evidence.

We published a post about alleged AI-edited photos used to claim refunds on a boat tour in Phuket. The story includes images shared on our page and reactions from locals, but no official statement from the boat operator yet. We expect authorities and platforms to look into verification measures.

If people can just fake photos and get refunds, small businesses will suffer badly. That seems so unfair to honest operators. Platforms must require better proof.

This is what I keep saying: once tech is in the hands of scammers, the playing field is gone. But do we really want platforms to make complaining impossible for genuine cases? Tough balance.

We agree it’s a balance; our readers suggested time-stamped photos and short onboard videos as practical steps. The same readers also want easier processes for legitimate complaints.

Timestamps are easy to spoof, though. Embedding verifiable metadata or adding a short live video clip with a specific gesture would do more to prove authenticity.

Video evidence would kill a lot of fraud, yet many tourists won’t bother. Platforms need incentives and penalties that work.

Local businesses are frightened, and rightly so. Reputation is everything in Phuket, and one viral claim can cost months of bookings.

As someone working in hospitality here, I can say we already check guests more closely, but we can’t police every photo taken after they leave.

Why should locals be the ones to prove a negative? If there’s an allegation, the accuser should prove authenticity beyond a basic image.

That’s logical, but in practice platforms often side with the cardholder to avoid chargeback headaches. The system is biased.

Also remember that AI tools can now age or dirty items convincingly. Do we criminalize every edited image? Where do we draw the line?

Legal frameworks need to address intent. Satirical edits are different from edits submitted as evidence in a financial dispute.

This sounds like a modern-day he-said-she-said but with Photoshop. People should be punished if proven fraudulent. Simple.

Simple but not simple — proving fraud requires resources. Many small operators can’t hire forensic experts to debunk manipulated photos.

Then platforms should fund the verification if they force the refund process. They make the rules, they pay the cost.

And what about freedom to complain? If platforms are too strict, real victims will be muzzled.

I work in consumer rights and I worry both sides are right. Consumers need protection, but evidence standards must rise with technology. Education for both tourists and businesses is key.

As a lawyer, I recommend platforms implement multi-factor verification in disputes and make AI-forensics accessible. Courts will adapt slowly otherwise.

Exactly. And regulators should set minimum verification tech standards for high-risk sectors like travel.

I’m skeptical of tourists who take ‘before’ and ‘after’ pics excessively. Sometimes the goal is a refund, not truth. That said, people do have real problems sometimes.

I got a refund once for a horrible Airbnb and used time-stamped photos. I wasn’t trying to scam anyone, just documented poor hygiene.

That’s fair. My point is about intent and scale — one genuine case doesn’t negate organized abuse.

Also cultural expectations differ; what one tourist calls ‘dirty’ another sees as ‘normal’ in a budget service. Context matters.

Technically, detecting AI edits requires forensic analysis of image inconsistencies and metadata, but many casual edits leave traces. Investment in detection tools will grow.

Great, but who pays for that tech? The platform, the business, or the customer? The money has to come from somewhere.

Ideally platforms subsidize basic checks and reserve paid forensic services for contested high-value claims.

Subsidize? Platforms already take big fees. They can afford to invest in dispute resolution instead of issuing quick refunds to avoid chargebacks.

As a tourist, I feel nervous now. I don’t want to be accused of lying if I complain about a real problem. This will make honest complaints harder.

Tourists should keep receipts and short videos. Most issues are easy to prove with a 20-second clip showing the problem and a timestamp.

Local businesses are losing trust in international guests because of stories like this. It’s not just refunds — it’s threats to livelihoods. We need swift local action.

Enforcement should be balanced. Quick arrests or witch hunts will scare tourists away too, and that’s bad for everyone.

If the edited images are proven fake, prosecute for fraud. But make sure the investigation is transparent so tourists know the process.

This is a PR disaster waiting to happen. One viral thread accusing Phuket of dirty boats will cost more than any single refund.

We try to report responsibly and note these are allegations with no official statements yet. But public perception moves fast online.

PR or justice — both matter. But how do you prove intent without spending a fortune on image forensics?

Sometimes I think we should ban photo evidence completely unless recorded via platform tools during the service. Then at least there’s a chain of custody.

That would be extreme and invasive. People value privacy, and forcing platform-recorded media could be a privacy nightmare.

A middle ground: optional platform-verified uploads with incentives like quicker dispute resolution for verified submissions.

I run a small dive boat and we’ve had two chargebacks in five years, both clearly fraudulent. It’s demoralizing and banks rarely back us. Something must change.

Banks side with cardholders to reduce their own risk. That’s why platform-level verification is key, not just business protests.

Financial institutions could require stronger evidence for disputed transactions linked to services. Policy change is needed upstream.

I keep commenting because this topic matters. More transparency, better tech, and fairer platform rules would help. It’s not impossible.

You’ve been active and your points are consistent. But what about ordinary tourists who don’t know about verification steps? Education campaigns could help.

Agreed. Tourist education + platform features = fewer false claims and fewer genuine complaints ignored.

As a traveler I expect to be heard. But I also dislike scammers. Maybe travel insurance companies could help verify claims and mediate disputes.

Insurers could play a role, but they add cost. Still, for high-value bookings an insurer-mediated claim could be a reasonable extra layer.

Local police should be trained in digital forensics. If claims are fraudulent, there should be consequences to deter this behavior.

Several readers called for police training and faster local investigations; we’ll follow up with requests for official comment from authorities.

Training is good, but the process must be impartial and timely. Slow investigations don’t help businesses or tourists.

This is a global problem, not just Phuket. AI editing will upend many industries unless verification evolves quickly.

Correct. We need international standards for digital evidence and cooperation between platforms, law enforcement, and consumer groups.

Don’t forget cultural nuance: a moldy banana may be a sign of poor care or just a one-off lapse. Context and corroboration matter.

Context matters, but so does consumer safety. If something is actually unsanitary, people should be able to speak up without being gaslit.

This will be solved by tech eventually: better provenance, embedded watermarks, verified uploads. Until then it’s messy and unfair to many.

I hope solutions respect privacy and accessibility. Not everyone can use fancy verified tools while traveling.

I know tourists who got refunds for legitimate issues; they weren’t liars. But the rise of easy image editing makes me skeptical of any single photo as proof.

Then platforms should require multiple evidence types: photos, brief videos, timestamps, and vendor statements before awarding refunds.

Yes, multi-evidence is sensible and protects both sides.

I think public shaming on Facebook is dangerous. Accusations of fraud can ruin reputations before any proof is presented. Responsible journalism matters.

We strive for responsible reporting and note allegations and lack of official statements. But social media often amplifies and accelerates outrage.

True, but social platforms are often where customers first vent. Companies need to respond quickly and transparently to counter misinformation.

We need consumer protection AND anti-fraud measures. Framing it as one or the other is false. Both can coexist with the right systems.

Well put. The policy challenge is integrating both goals without creating perverse incentives for either side.

Has anyone checked if the tourists had a history of claims? Platforms could flag repeat complainers for review.

Platform-side flags help, but they can also be abused or biased. Transparency about criteria would be necessary.

Funny how technology that was supposed to empower creativity is now empowering fraud. Not surprised but disappointed.

Tech is neutral; humans decide usage. Education, laws, and platform design steer outcomes more than the tools themselves.

I just want honest travel experiences documented. Maybe platforms should let vendors upload their own verified photo galleries before trips so customers know what to expect.

We do that for many operators. Pre-trip galleries plus short post-trip checks could prevent many disputes.

Good point — several comments suggested verified vendor galleries and scheduled inspections as preventative measures.

Prosecute clear fraudsters, educate tourists, and require stronger evidence for refunds. Problem solved. Well, mostly.

Reality is never tidy, but that framework is a decent start. Implementation is where it gets messy.

What worries me is the chilling effect on genuine whistleblowers. If complaining gets you labeled a criminal, people will stay silent about real dangers.

Exactly. Any system must protect those raising legitimate safety concerns while filtering bad-faith actors.

I’m impressed by reader suggestions here. Time-stamped video, platform-verified uploads, and impartial forensics together could form a robust approach.

Those are good steps. The harder part is global enforcement and making these tools accessible to budget travelers.

One-sentence thought: make verified uploads free and fast, and ban anonymous claims without them. That would reduce noise instantly.

But some guests might not have access to the platform at the time of incident. Flexibility matters for fairness.

Then allow delayed verified uploads within a set window, with stronger scrutiny after that window closes.